Think you can build your own shadow metering solution? You probably can. The real question is: should you?

Building a utility data reporting and analytics system in-house might seem like a solid plan. But in practice, it’s a minefield of technical complexity, hidden costs, and unexpected delays, especially when scaling across a portfolio.

And here’s the thing: if you’ve even considered building your own system, you’re probably one of the few teams with the technical chops to actually pull it off.

On paper, the project seems straightforward: install a few meters, connect them to a database, and voilà, insightful data, ready to use.

What often gets overlooked are the problems that can emerge during and after a deployment, and what you really need to consider before going down that road.

What to consider about the hardware

Here’s where things usually go sideways first: hardware.

There are hundreds, maybe thousands, of meter options. Each comes with tradeoffs: price, accuracy, voltage and amperage limits, data and networking options, and more. Choosing the right one can take months of research and comparison.

Some meters require months of validation, matching reads against tenant utility bills to prove accuracy, which defeats the whole purpose if you’re trying to stop chasing tenants for data in the first place. Others can deliver accuracy out of the box if they’re specced and deployed correctly.

Will you get access to meters directly from manufacturers, or will you be buying retail? If it’s the latter, go ahead and add a hefty markup to your hardware budget. Planning to meter gas and water too? That’s an entirely new layer of complexity.

Provisioning is another hidden landmine. Pre-configured meters arrive ready to plug into your system. If you skip that step, someone will be stuck provisioning them on-site, often with inconsistent settings or no access to the backend. Now try debugging that at scale.

Then comes the installation.

Who’s going to install them? And how will they know where to install them in specific buildings? Are they prepared to verify data is flowing correctly before they leave—or will they need to come back, delaying the project?

Different regions, different utilities, panel types, and service configurations all introduce new variables. Are your installers ready for that too?

Who’s coordinating all this across dozens of buildings, utilities, and local teams? Who’s ensuring PMs and tenants are even aware that work is happening? Who's on the hook when something slips?

If you’re lucky, the installation won’t require any downtime in your building. But if your team isn’t used to this work, you may face temporary shutdowns of critical systems.

For most tenants, that would be 100% unacceptable.

Choosing the wrong hardware can quietly compromise your entire operation. It’ll give you a false sense that everything’s working until it’s too late to fix.

Meters installed. Now what?

The second major stage of a deployment is connecting the data to where it will actually be useful.

Beyond the technical integration, you need to ensure that data is accurately contextualized—meaning correctly linked to the right physical meter, units of measurement, and time periods.
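To make "contextualized" a little more concrete, here is a minimal Python sketch of what linking a raw reading to its meter, unit, and time period could look like. Everything in it is an assumption for illustration: the `Meter` and `Reading` types, the `contextualize` helper, the field names in the raw payload, and the unit table are hypothetical, not the format of any particular meter or platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict

# Hypothetical conversion factors from the unit a device reports to kWh.
TO_KWH = {"Wh": 0.001, "kWh": 1.0, "MWh": 1000.0}


@dataclass(frozen=True)
class Meter:
    meter_id: str
    building: str
    unit: str  # unit this physical meter reports in


@dataclass(frozen=True)
class Reading:
    meter_id: str
    building: str
    interval_start: datetime  # UTC
    interval_end: datetime    # UTC
    kwh: float


def contextualize(raw: dict, registry: Dict[str, Meter]) -> Reading:
    """Attach meter, unit, and time-period context to one raw reading.

    Assumes `raw` carries meter_id, value, an ISO 8601 end-of-interval
    timestamp with a UTC offset, and the interval length in minutes.
    """
    meter = registry.get(raw["meter_id"])
    if meter is None:
        # A reading we cannot tie to a known physical meter is not usable.
        raise KeyError(f"unknown meter: {raw['meter_id']}")

    end = datetime.fromisoformat(raw["timestamp"])
    if end.tzinfo is None:
        raise ValueError("timestamp must include a UTC offset")
    end = end.astimezone(timezone.utc)
    start = end - timedelta(minutes=raw["interval_minutes"])

    return Reading(
        meter_id=meter.meter_id,
        building=meter.building,
        interval_start=start,
        interval_end=end,
        kwh=raw["value"] * TO_KWH[meter.unit],
    )


registry = {"M-1": Meter("M-1", "Building A", "Wh")}
raw = {
    "meter_id": "M-1",
    "value": 1500.0,
    "timestamp": "2024-03-01T10:15:00-05:00",
    "interval_minutes": 15,
}
reading = contextualize(raw, registry)
```

The point of the sketch is that a number alone is not data: the same value means very different things depending on which meter it came from, what unit it was reported in, and which interval it covers.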

But the most important thing here is making sure the data can be trusted. And if the data isn’t accurate and verified, it could do more harm than good.

You need a structured QA process — one that runs automated checks, logs anomalies, and prioritizes issues based on severity.

Otherwise, you’re relying on assumptions.

Without this, even technically valid data can produce misleading insights and reports. And if you're using that data to drive decarbonization strategies and reduce emissions, bad data leads to bad decisions.
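As a rough illustration of what a structured QA pass might involve, the Python sketch below runs a few automated checks on one meter's interval readings, logs each anomaly, and returns them ordered by severity. The specific checks, the severity levels, and names such as `run_qa` and `max_kwh` are assumptions chosen for the example, not a prescribed rule set.

```python
import logging
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional

logger = logging.getLogger("meter_qa")


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Anomaly:
    meter_id: str
    check: str
    severity: Severity
    detail: str


def run_qa(meter_id: str, readings: List[Optional[float]], max_kwh: float) -> List[Anomaly]:
    """Check interval readings (kWh per interval, None = missing interval)."""
    anomalies: List[Anomaly] = []
    present = [r for r in readings if r is not None]

    # Physically impossible values are treated as the most severe.
    negatives = sum(1 for r in present if r < 0)
    if negatives:
        anomalies.append(Anomaly(meter_id, "negative_value", Severity.HIGH,
                                 f"{negatives} negative reading(s)"))
    over = sum(1 for r in present if r > max_kwh)
    if over:
        anomalies.append(Anomaly(meter_id, "exceeds_capacity", Severity.HIGH,
                                 f"{over} reading(s) above {max_kwh} kWh"))

    # Gaps make totals unreliable but may just be a communication hiccup.
    missing = len(readings) - len(present)
    if missing:
        anomalies.append(Anomaly(meter_id, "missing_intervals", Severity.MEDIUM,
                                 f"{missing} interval(s) missing"))

    # Identical values across the window can hint at a stuck sensor.
    if len(present) >= 4 and len(set(present)) == 1:
        anomalies.append(Anomaly(meter_id, "flatline", Severity.LOW,
                                 f"all {len(present)} readings equal {present[0]}"))

    for a in anomalies:
        logger.warning("%s %s [%s]: %s", a.meter_id, a.check, a.severity.name, a.detail)

    # Most severe first, so the worst problems get attention first.
    return sorted(anomalies, key=lambda a: a.severity, reverse=True)


issues = run_qa("M-1", [1.2, None, -0.5, 1.1], max_kwh=50.0)
```

In a real deployment the list of checks would be far longer and tuned per meter type, but the shape stays the same: checks run automatically, every finding is recorded, and severity decides what gets looked at first.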

That kind of quality control isn’t a one-time event. That’s why the next layer is just as important: long-term maintenance.

How are you going to maintain the meters long term? What if they go down or get hit by a forklift?

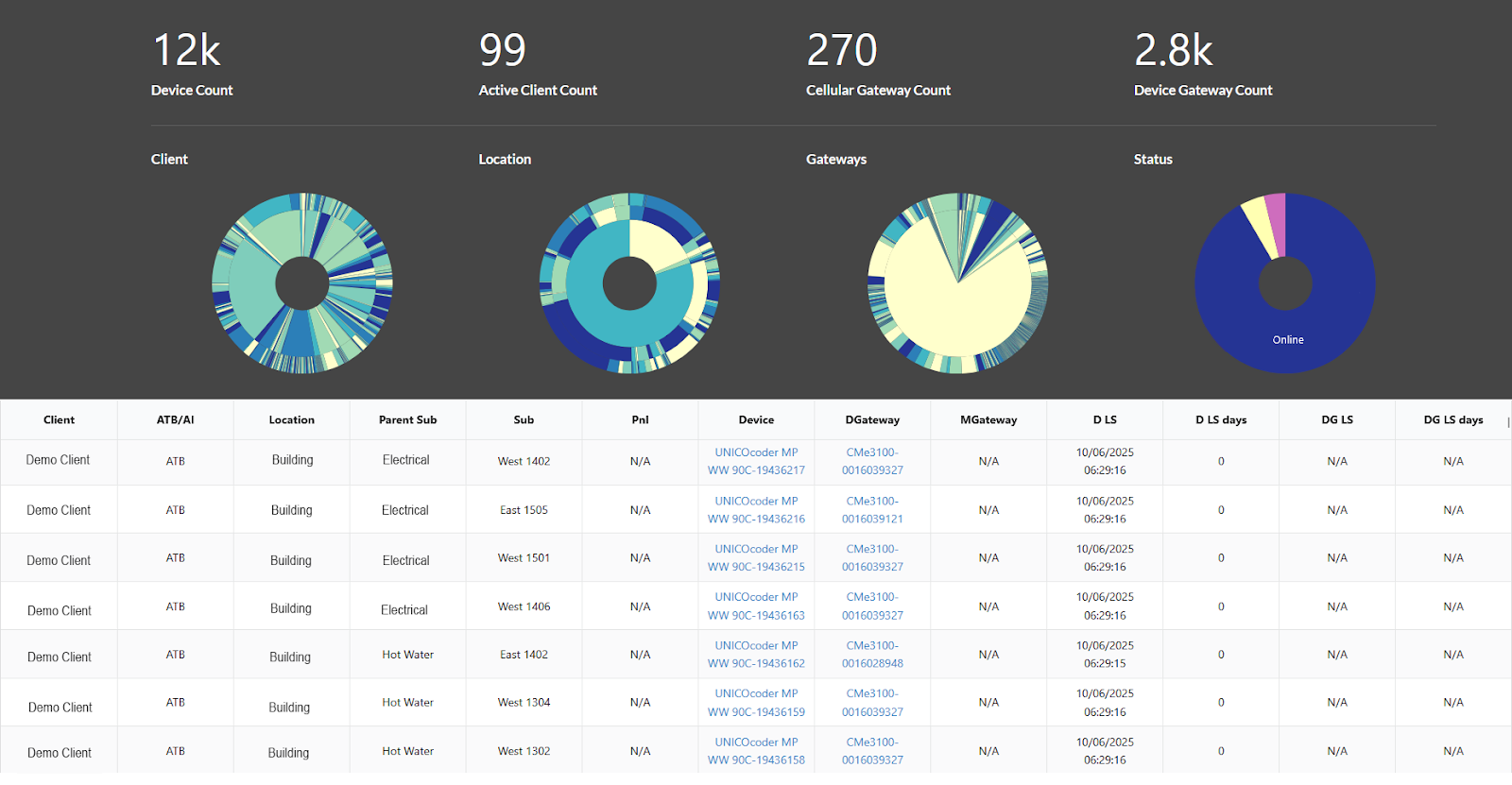

You need real-time monitoring and a system that knows when something's off — whether it's a device failure, a communication issue, or a subtle drift in readings.

At Enertiv, we built our maintenance layer around this principle. Every meter is continuously validated in the background, with anomalies flagged automatically, so issues are resolved before they snowball.
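To give a sense of what a background check like this can look like, here is a deliberately simplified Python sketch. It is not Enertiv's implementation. The `meter_status` function, the one-hour staleness window, and the 20% drift tolerance are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean
from typing import Sequence


def meter_status(
    last_seen: datetime,
    now: datetime,
    baseline: Sequence[float],
    recent: Sequence[float],
    stale_after: timedelta = timedelta(hours=1),
    drift_tolerance: float = 0.2,
) -> str:
    """Return "stale", "drift", or "ok" for a single meter.

    "stale": no data within `stale_after` (device or communication problem).
    "drift": the recent average differs from the baseline average by more
             than `drift_tolerance`, expressed as a fraction of the baseline.
    """
    if now - last_seen > stale_after:
        return "stale"

    if baseline and recent:
        base = mean(baseline)
        if base > 0 and abs(mean(recent) - base) / base > drift_tolerance:
            return "drift"

    return "ok"


now = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
silent = meter_status(now - timedelta(hours=3), now, [10, 10, 10], [10, 10, 10])
drifting = meter_status(now - timedelta(minutes=10), now, [10, 10, 10], [13, 13, 13])
healthy = meter_status(now - timedelta(minutes=10), now, [10, 10, 10], [10.5, 10.5, 10.5])
```

A production system would need to tell a dead device from a dropped network link and account for seasonal or occupancy-driven changes before calling something drift. The sketch only shows the basic idea of checking every meter continuously instead of waiting for someone to notice.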

Leveraging data beyond benchmarking

Benchmarking is just the beginning. The real value of utility data lies in what it actually enables.

This part of the conversation often gets overlooked — because pulling it off requires real operational structure, especially at scale.

Consumption data can, for example, provide operational insights to identify inefficient usage patterns and detect failures or equipment running after hours. That translates into real savings, operational efficiency, and measurable progress toward decarbonization goals.
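As one small example of that kind of insight, the Python sketch below estimates how much of a day's consumption falls outside occupied hours. The `after_hours_share` function, the 8:00 to 18:00 schedule, and the sample numbers are hypothetical, chosen only to illustrate the calculation.

```python
from typing import Dict


def after_hours_share(hourly_kwh: Dict[int, float], open_hour: int = 8, close_hour: int = 18) -> float:
    """Fraction of a day's kWh used outside [open_hour, close_hour).

    `hourly_kwh` maps hour of day (0-23) to the kWh consumed in that hour.
    """
    total = sum(hourly_kwh.values())
    if total == 0:
        return 0.0
    after = sum(kwh for hour, kwh in hourly_kwh.items()
                if not (open_hour <= hour < close_hour))
    return after / total


# Made-up day: 10 kWh per hour while occupied, 5 kWh per hour otherwise.
day = {hour: (10.0 if 8 <= hour < 18 else 5.0) for hour in range(24)}
share = after_hours_share(day)  # 70 of 170 kWh falls outside occupied hours
```

A building that is empty at night but still uses a large share of its energy after hours is a natural place to start looking for equipment that should have been switched off.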

And it goes deeper. With the right structure, utility data opens the door to what we call goldmine services—programs like demand response, energy procurement, community solar, and building certifications that unlock new revenue, new incentives, and new capital sources.

But none of this happens automatically.

Are you going to analyze that data manually, throwing smart people at spreadsheets and hoping for insights?

If your team doesn’t have the DNA to build machine learning and AI algorithms, you’re leaving massive value on the table.

How do you capture just enough detail to drive action — without adding unnecessary complexity?

How do you balance costs with delivering real value — and make that work at scale?

After the analysis, how are you going to present that information to tenants in a way that leads to action? Most landlords barely know their tenants beyond the facility manager.

Are you going to develop a tenant-facing interface?

How will you provide support so they can implement improvements?

Because once you do, the conversation changes. Imagine walking into a lease renewal and saying, “We helped your team save $180,000 this year.”

That’s not just leverage. It’s a trump card.

These aren’t hypothetical scenarios. They’re the kinds of advantages that a well-executed utility data strategy can unlock. Now imagine what’s possible when you bring that to scale.

CASE STUDY

Top 10 Global Asset Management Firm deploys Enertiv to get 100% utility data coverage across 100+ industrial sites

Light at the end of the tunnel

If you’ve made it this far, you’ve likely realized that implementing a utility data collection system in-house involves much more than installing meters and connecting APIs.

Yes, it’s possible to build it from scratch.

But it takes time, resources, and a learning curve that’s hard to fully anticipate.

Unless this is your core business, you’ll likely spend years learning lessons we’ve already paid for through failed installs, messy integrations, and countless conversations with teams facing real-world demands.

What may seem like a straightforward checklist today was, for us, a long path marked by challenges and meaningful breakthroughs.

Today’s sustainability leadership depends on speed and data precision. If you’re serious about hitting decarbonization targets, every delay in collecting accurate utility data is a missed opportunity.

That’s why going through this entire build process internally can actively slow your energy transition.

You don’t need to start from zero to reach net zero. You just need to avoid the mistakes that stall everyone else.